0

180° Video

Videos showing 180° of a scene from the user’s point of view. The video covers nearly the same field of view as the human eye and is ‘cut off’ on either side of the viewer’s head, at the edges of their field of view. In contrast to 360° video, this setup allows for a more directed viewing experience and reduces the amount of head movement needed. Limiting the field of view to 180° also means the image is stretched less, because the same number of pixels covers only half the area. The result is a much sharper (i.e., higher-resolution) image than a 360° video of the same scene.

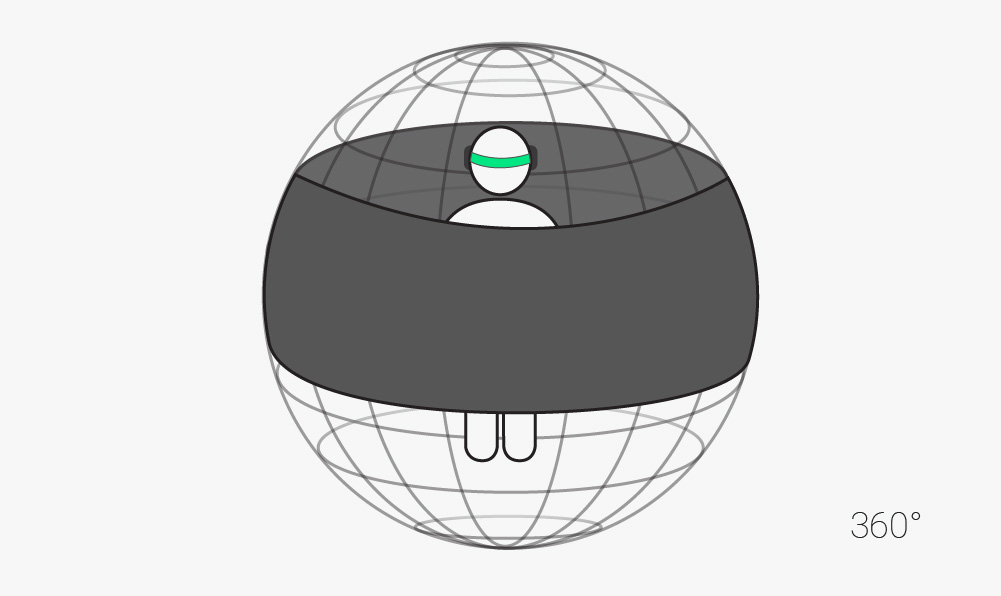

360° Video

Videos showing 360° of a scene from the user’s point of view. The entire scene is visible in all directions, allowing the viewer to look anywhere without the image being cut off and breaking immersion. While this expands the viewer’s experience, it also spreads the same number of pixels over twice the area of a 180° video, so any given part of the scene is shown at a lower resolution.

A

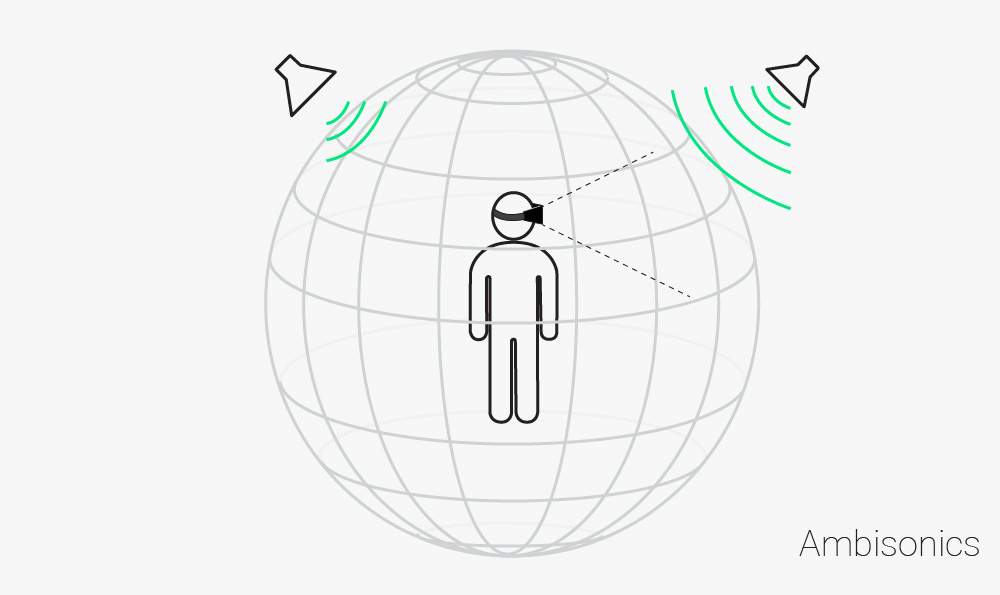

Ambisonics

Ambisonics is a method of recording and reproducing sound in 360°, covering the full sphere around the listener. Recording is done with a special array of at least four microphones that captures sound from every direction. The recorded signals are stored and later decoded for use with surround-sound systems and 360° videos. Ambisonic recordings capture the complete sound field arriving at the recording point from all directions. However, since an ambisonics device records sound from a single location only, it is less useful in larger 3D environments or when simulating movement through a space.
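
To illustrate how a sound from one direction is represented in ambisonics, the sketch below encodes a single mono sample into the four channels (W, X, Y, Z) of first-order B-format. It assumes the traditional FuMa convention (X forward, Y left, Z up, W scaled by 1/√2); other conventions such as ACN/SN3D order and scale the channels differently. The function name is hypothetical.

```python
import math

def encode_first_order(sample: float, azimuth_deg: float, elevation_deg: float):
    """Encode one mono sample into first-order B-format (W, X, Y, Z).

    Assumes the FuMa convention: azimuth measured counterclockwise
    (towards the left) from straight ahead, elevation upward from the
    horizontal plane, and W scaled by 1/sqrt(2).
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    w = sample / math.sqrt(2)                  # omnidirectional component
    x = sample * math.cos(az) * math.cos(el)   # front/back figure-of-eight
    y = sample * math.sin(az) * math.cos(el)   # left/right figure-of-eight
    z = sample * math.sin(el)                  # up/down figure-of-eight
    return w, x, y, z

# A source directly to the left (azimuth 90°) ends up almost entirely in Y.
print(encode_first_order(1.0, 90.0, 0.0))
```

Because direction is stored in the relative levels of the four channels rather than in speaker feeds, the same recording can later be decoded for different speaker layouts or for headphones.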

Aspect Ratio

Aspect ratio is the ratio of an image’s or screen’s width to its height, usually written as width:height (for example, 16:9 for a 1920×1080 screen). If the aspect ratio of an image or video does not match that of the screen, the content is usually scaled to fit inside the screen and the unused areas at the edges are left blank; this preserves the whole image and its proportions but wastes screen space. In VR, an incorrect aspect ratio can warp images or introduce artificial cut-offs, which can make the viewer nauseous.
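
The two operations described above, reducing a resolution to its aspect ratio and fitting content into a screen with a different ratio, can be sketched as follows. The function names are hypothetical; the fitting step uses a single scale factor for both axes so the image is never distorted.

```python
from math import gcd

def simplify_ratio(width: int, height: int) -> str:
    """Reduce a pixel resolution to its aspect ratio, e.g. 1920x1080 -> '16:9'."""
    d = gcd(width, height)
    return f"{width // d}:{height // d}"

def fit_inside(src_w: int, src_h: int, screen_w: int, screen_h: int):
    """Scale content to fit the screen without distortion.

    Returns the scaled size plus the width of the unused bar on each side
    (left/right) and on the top/bottom.
    """
    scale = min(screen_w / src_w, screen_h / src_h)  # same factor on both axes
    out_w, out_h = round(src_w * scale), round(src_h * scale)
    return out_w, out_h, (screen_w - out_w) // 2, (screen_h - out_h) // 2

print(simplify_ratio(1920, 1080))          # 16:9
print(fit_inside(1440, 1080, 1920, 1080))  # 4:3 content on a 16:9 screen
```

In the second call, the 4:3 content keeps its full height and leaves a blank bar on each side of the 16:9 screen.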

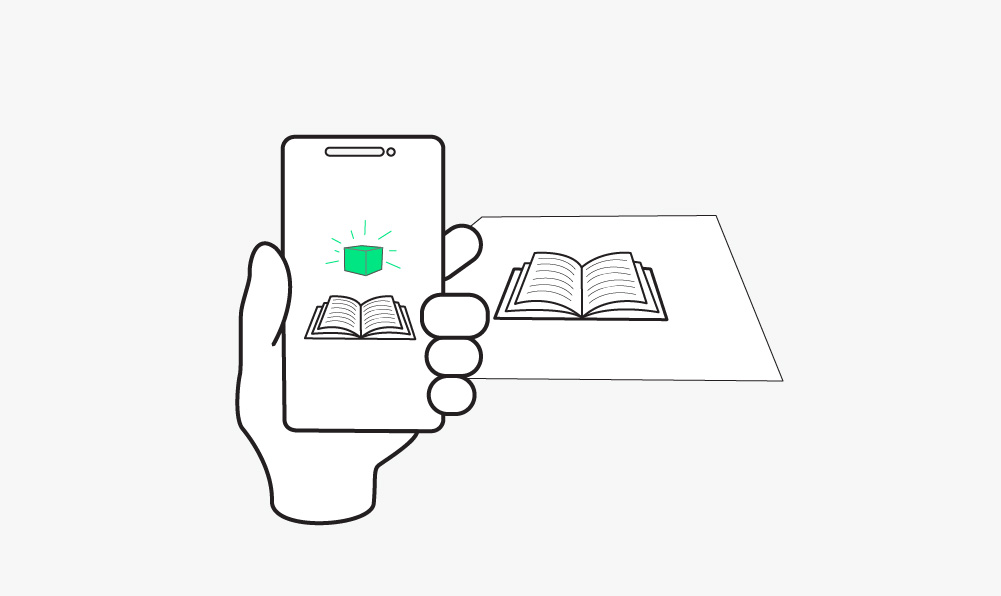

Augmented Reality

Augmented reality (AR) is a technology related to virtual reality, but with a few key differences. Instead of creating an entirely separate world within the confines of VR gear to replace the real world, it overlays visual or audio information onto the real world as the user sees it. It presents information relevant to what the user is seeing at any given time, or filters out other objects, according to the user’s needs. Like mixed reality, AR modifies the world as the user perceives it, but AR overlays are purely informative: unlike in mixed reality, they are neither anchored to nor interact with the real world. In this respect AR resembles HUD technology, albeit a much more advanced version of it. AR can be useful in many everyday situations, since it can be programmed to assist the user with a wide range of tasks. Also, like a HUD, it spares the user from checking other devices, as it presents the information they need directly in their view.

B

C

Cardboard (Google)

Google Cardboard is a VR platform developed by Google that offers users a low-cost alternative to other headsets, made from simple, cheap components. The headset can be bought preassembled from Google or built using instructions found on Google’s website. By downloading Google’s Cardboard app, users can view or build their own VR experiences. To use the platform, the user only needs to fold up the cardboard headset, run the Cardboard app on a compatible phone, place the phone in front of the lenses, and hold the headset in front of their eyes. While easy to use and inexpensive, Cardboard is less flexible and powerful than other headsets and lacks many of the special features of more expensive ones.


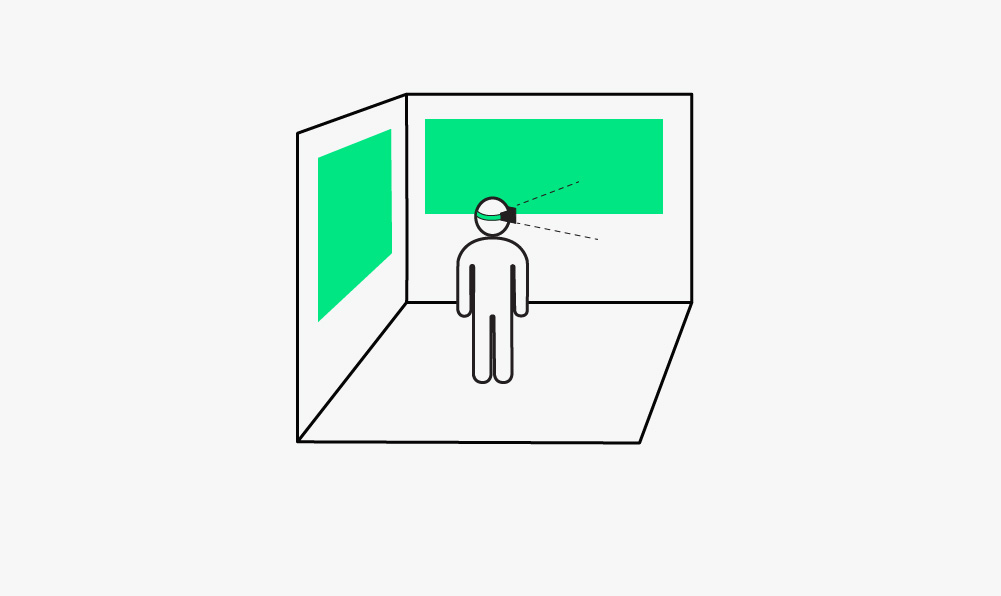

CAVE (Cave Automatic Virtual Environment)

A cave automatic virtual environment, or CAVE, uses projections on the walls and ceiling of a room to create the illusion of a real environment. The viewer can move around freely inside the CAVE, which gives them a sense of immersion. However, since the environment consists only of projections, it cannot be touched or directly interacted with, which can leave the viewer feeling somewhat disconnected from their surroundings.

D

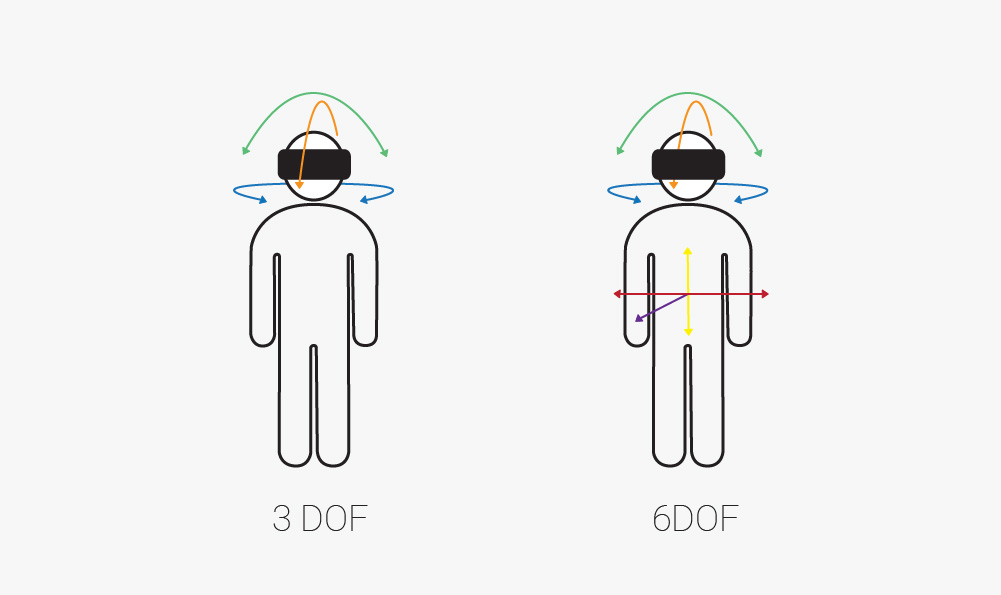

Degrees of Freedom (6DOF, 3DOF)

Degrees of freedom, or DOF, are the independent ways in which an object can move within a space. There are six basic types of movement, which fall into two groups: translation (straight-line movement in a specific direction) and rotation (movement about the x-, y-, or z-axis). An object can freely translate along each of the three perpendicular axes. These movements constitute the first three degrees of freedom: surge (forward and backward motion), heave (upward and downward motion), and sway (leftward and rightward motion). An object can also rotate about each of the three axes. These movements constitute the other three degrees of freedom: roll (tilting from side to side), pitch (tilting forward and backward), and yaw (turning left and right). Together these add up to six degrees of freedom, or 6DOF, and combinations of them can describe any movement of a rigid object. For instance, swinging a baseball bat is not a single movement, but a complex combination of rotations and translations performed at the same time. A VR system that tracks only the three rotations offers 3DOF, letting the user look around but not move through the scene, while a 6DOF system also tracks translation. This is an essential concept for correct positional tracking of human movement in a VR environment.
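
The sketch below represents a 6DOF pose as three translations plus three rotations and applies it to a point, and shows that a 3DOF pose is the same thing with the translation dropped. The axis convention (x right, y up, forward along -z, yaw about y, pitch about x, roll about z, rotation order Ry·Rx·Rz) and all names are assumptions for illustration; engines and SDKs use differing conventions.

```python
import math
from dataclasses import dataclass

@dataclass
class Pose:
    """A 6DOF pose. Assumed axes: x = right (sway), y = up (heave),
    z = backward (surge, so 'forward' is -z). Angles are in degrees."""
    x: float = 0.0
    y: float = 0.0
    z: float = 0.0
    pitch: float = 0.0  # rotation about x; +90 looks straight up
    yaw: float = 0.0    # rotation about y; +90 turns to the left
    roll: float = 0.0   # rotation about z

def _rot_x(a):
    c, s = math.cos(a), math.sin(a)
    return [[1, 0, 0], [0, c, -s], [0, s, c]]

def _rot_y(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, 0, s], [0, 1, 0], [-s, 0, c]]

def _rot_z(a):
    c, s = math.cos(a), math.sin(a)
    return [[c, -s, 0], [s, c, 0], [0, 0, 1]]

def _matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(3)) for j in range(3)]
            for i in range(3)]

def transform(pose: Pose, point):
    """Apply the combined rotation R = Ry(yaw)·Rx(pitch)·Rz(roll) to a point,
    then translate it by the pose position."""
    r = _matmul(_matmul(_rot_y(math.radians(pose.yaw)),
                        _rot_x(math.radians(pose.pitch))),
                _rot_z(math.radians(pose.roll)))
    rotated = [sum(r[i][k] * point[k] for k in range(3)) for i in range(3)]
    return (rotated[0] + pose.x, rotated[1] + pose.y, rotated[2] + pose.z)

def to_3dof(pose: Pose) -> Pose:
    """A 3DOF headset only reports orientation, so translation is dropped."""
    return Pose(pitch=pose.pitch, yaw=pose.yaw, roll=pose.roll)

# Forward direction after turning 90° to the left and stepping 0.2 m right.
head = Pose(x=0.2, yaw=90.0)
print(transform(head, (0.0, 0.0, -1.0)))
```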

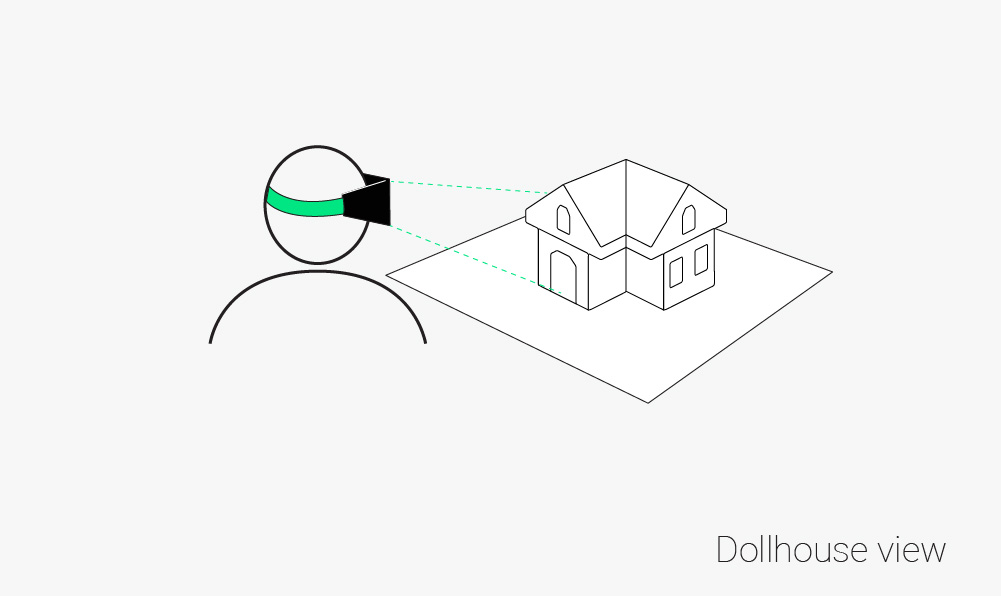

Dollhouse view

A dollhouse view refers to a zoomed out, usually top-down view of a given 3D space or structure from the outside. It enables designers to observe the entire area without moving around and view computer-modeled designs in their entirety before physical prototyping begins. This makes finding defects or errors much less labor-intensive and provides the viewer with a good idea of how something will look once built.

E

Equirectangular Projection/Video

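In an equirectangular projection, a spherical image is mapped onto a flat rectangle: longitude (the horizontal angle) maps linearly to the horizontal axis and latitude (the vertical angle) to the vertical axis, so a full sphere fills a frame with a 2:1 aspect ratio. A familiar example is a map of the earth spread out on a flat surface instead of on a globe. The images recorded by several cameras pointing in different directions can be merged into a single equirectangular frame, and thus a spherical video, in a process called stitching.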
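
The mapping between viewing directions and pixels in an equirectangular frame is linear in both angles, as the sketch below shows. It assumes longitude runs from -180° to 180° with 0° at the centre of the frame, and latitude from -90° (bottom row) to +90° (top row); the function names are hypothetical.

```python
def direction_to_pixel(lon_deg, lat_deg, width, height):
    """Map a viewing direction to (u, v) pixel coordinates in an equirectangular frame.

    Assumes longitude -180..180 (0 = centre of the frame, increasing to the right)
    and latitude -90..90 (+90 = straight up, at the top row).
    """
    u = (lon_deg + 180.0) / 360.0 * width
    v = (90.0 - lat_deg) / 180.0 * height
    return u, v

def pixel_to_direction(u, v, width, height):
    """Inverse mapping: pixel coordinates back to (longitude, latitude) in degrees."""
    lon = u / width * 360.0 - 180.0
    lat = 90.0 - v / height * 180.0
    return lon, lat

W, H = 3840, 1920  # 2:1, since 360° of longitude spans 180° of latitude
print(direction_to_pixel(0.0, 0.0, W, H))    # centre of the frame
print(direction_to_pixel(90.0, 45.0, W, H))  # 90° right, 45° up
```

Because every row has the same number of pixels, regions near the top and bottom (the poles) are heavily stretched, just as polar regions look oversized on a flat world map.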

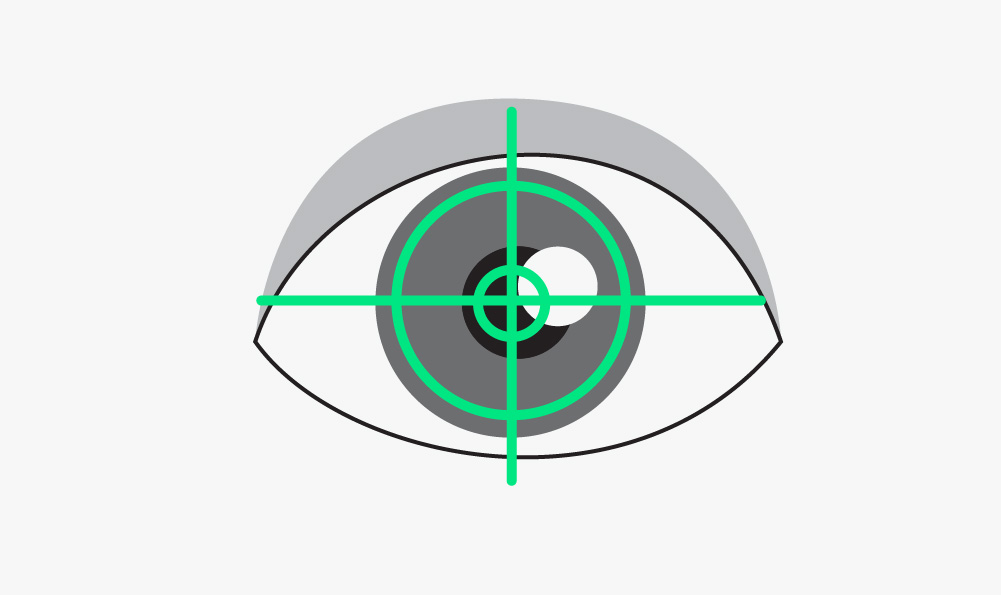

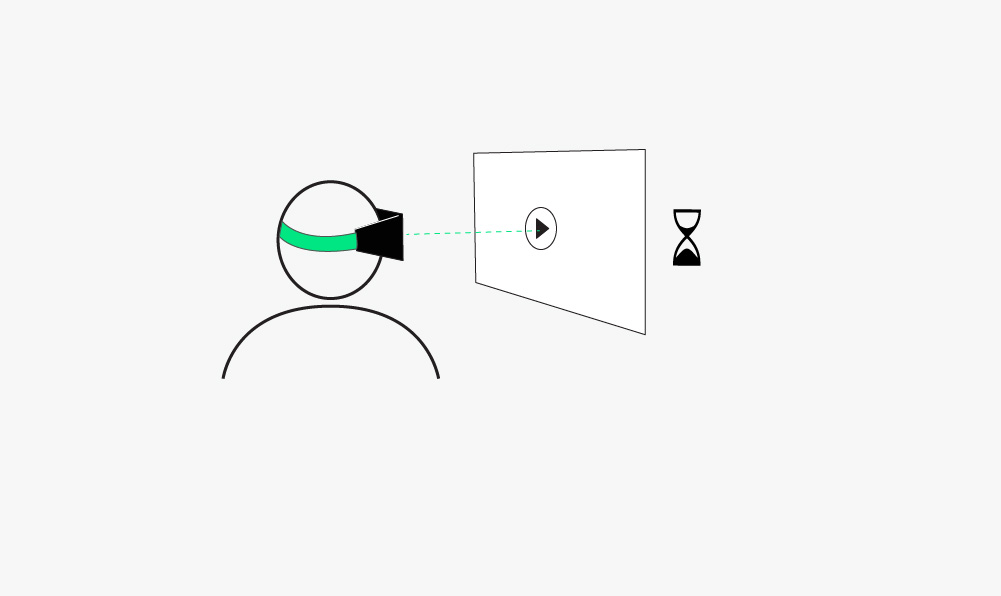

Eye tracking

Eye tracking is a process used in headsets to measure and keep track of the direction of the user’s gaze. Using this information, it is possible to reproduce the eyes’ natural process of bringing objects into and out of focus depending on what the user is concentrating on. Simulating normal eye behavior in this way makes the user’s VR experience more realistic and therefore greatly enhances the feeling of immersion.

F

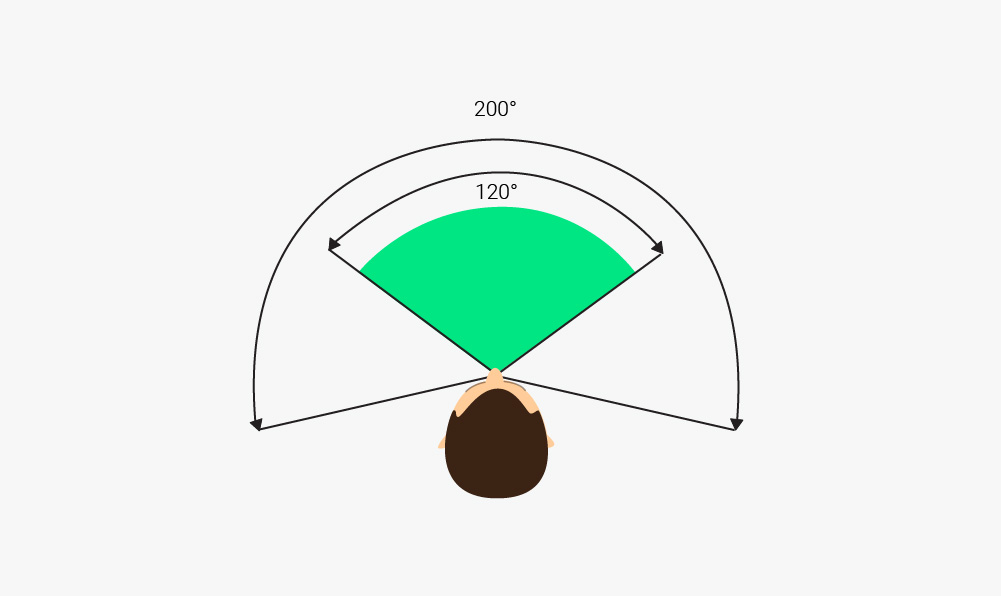

FOV (Field of View)

The field of view is the angular extent of what is visible at any given moment from a given point of view. Most people’s horizontal field of view is approximately 200°: about 120° of binocular vision, plus about 40° of monocular vision on either side that is covered by only one eye. Extending the field of view in a visual medium can dramatically increase the number of visible objects, but it can warp the image and break immersion if it deviates too far from the “normal” field of view of the human eye or overloads the image with objects.
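
Renderers usually describe the field of view per axis, and for a flat (rectilinear) view the horizontal and vertical FOV are linked through the aspect ratio. The sketch below shows that relationship; it assumes an ideal rectilinear projection, whereas real headset lenses add distortion, so it is only an approximation for VR. The function name is hypothetical.

```python
import math

def vertical_fov(horizontal_fov_deg: float, aspect: float) -> float:
    """Vertical FOV of a flat (rectilinear) view with the given horizontal FOV
    and width/height aspect ratio."""
    half_h = math.radians(horizontal_fov_deg) / 2
    return math.degrees(2 * math.atan(math.tan(half_h) / aspect))

# The human figures quoted above add up: 120° binocular + 2 × 40° monocular.
print(120 + 2 * 40)              # 200
print(vertical_fov(90, 16 / 9))  # a 90° wide 16:9 view is roughly 59° tall
```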

Frame Rate (30fps and 60fps)

Frame rate is the frequency at which the image (frame) on a display is replaced by the next one, measured in frames per second (fps). Each frame is a still image, and showing frames in rapid succession creates the illusion of movement. Two of the most common frame rates are 30 fps and 60 fps. The lower the frame rate, the fewer images are used to ‘bridge’ the gap between one moment and the next, so each frame must show a larger change than the one before. The display also ‘lingers’ on each image for a longer fraction of a second, so objects seem to ‘teleport’ between frames and motion looks jerky or choppy. Higher frame rates use more images with smaller changes for each second of content, so the eye sees many tiny changes in quick succession rather than a few large, noticeable ones, and motion feels smoother. At 60 fps, each frame is shown for half as long as at 30 fps, and moving objects advance half as far between frames. In VR, a high frame rate is especially important, since a low frame rate is very uncomfortable for the viewer. To preserve immersion and a sense of reality, as well as to avoid nausea, VR setups are commonly expected to maintain around 90 fps or more.
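
The effect of frame rate on smoothness can be put into numbers: the time each frame stays on screen, and how far a moving object jumps between consecutive frames. The sketch below assumes, purely for illustration, an object (or view) turning at a constant 90° per second; the function names are hypothetical.

```python
def frame_time_ms(fps: float) -> float:
    """How long each frame stays on screen, in milliseconds."""
    return 1000.0 / fps

def step_per_frame(speed: float, fps: float) -> float:
    """How far something moving at `speed` units per second jumps between frames."""
    return speed / fps

for fps in (30, 60, 90):
    print(f"{fps} fps: {frame_time_ms(fps):.1f} ms per frame, "
          f"{step_per_frame(90.0, fps):.1f}° jump per frame at 90°/s")
```

Doubling the frame rate from 30 to 60 fps halves both the time each frame lingers on screen and the size of each jump between frames.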

G

Gaze-based interaction

Gaze-based interactions are interactions in which the VR content responds directly to the user’s gaze, i.e., the direction the user is looking while wearing a VR headset. They can be used, for example, to control a menu interface, to navigate within a virtual space, or to interact with other characters in a VR game. The constant challenge with gaze-based interaction is tuning the gaze timeout (how long the user must look at something before it activates) to strike a good balance between accidental activation and waiting too long for the action.
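
The gaze-timeout trade-off is easiest to see in a dwell timer: an item is selected only after the gaze has rested on it continuously for a set time. The class below is a hypothetical sketch; it assumes the application reports, once per frame, which item the gaze currently hits (or `None`) and how long the frame lasted.

```python
from typing import Optional

class DwellSelector:
    """Selects a gazed-at item once the gaze has stayed on it for `dwell_time` seconds."""

    def __init__(self, dwell_time: float = 1.0) -> None:
        self.dwell_time = dwell_time
        self._target: Optional[str] = None
        self._elapsed = 0.0
        self._fired = False

    def update(self, gazed_target: Optional[str], dt: float) -> Optional[str]:
        """Call once per frame; returns the item on the frame it gets selected."""
        if gazed_target != self._target:
            # Gaze moved to a different item (or away): restart the timer.
            self._target = gazed_target
            self._elapsed = 0.0
            self._fired = False
            return None
        if gazed_target is None or self._fired:
            return None  # nothing under the gaze, or this item was already selected
        self._elapsed += dt
        if self._elapsed >= self.dwell_time:
            self._fired = True
            return gazed_target
        return None

sel = DwellSelector(dwell_time=1.0)
for frame in range(6):
    hit = sel.update("play_button", 0.25)  # gaze stays on a (hypothetical) button
    if hit:
        print(f"selected {hit} on frame {frame}")
```

A shorter `dwell_time` makes selection faster but more prone to accidental activation; a longer one is safer but makes the user wait.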

H

Haptics

Haptics provide physical feedback to the user for actions taken in virtual reality environments, simulating the expected physical results of the user’s movements, much like the vibration effects of game controllers. When the user tries to grab or touch something in the VR setting, gloves or other gear worn by the user can apply pressure to the corresponding part of the user’s body, making it feel as though the user is touching a virtual object. This greatly increases realism, as objects the user touches can be ‘felt’ and have a real presence, rather than being visible but impossible to touch. Haptics close another gap between reality and VR experiences, giving the user a sense of touch that corresponds to their actions, in addition to the usual senses of sight and hearing.

Head mounted display (HMD)

A head-mounted display, or HMD, is a VR headset: a set of lenses combined with either a built-in display or an attached smartphone, in the form of a helmet or goggles that are strapped to the user’s head. Some contain a variety of sensors that track the movement of the head; others are simple arrangements of plastic and cardboard. What they all have in common is that they deliver at least a minimal virtual reality experience by generating a 3D image.


Head tracking

Head tracking is a process that monitors the current position and orientation of the user’s head. This is extremely important in VR, as it allows the virtual point of view to follow the user’s real point of view, so the user can turn their head and see different angles of the same scene within the VR environment. For immersion, this is an essential process, since it gives the user effortless freedom of movement and makes the experience much more interactive and realistic.

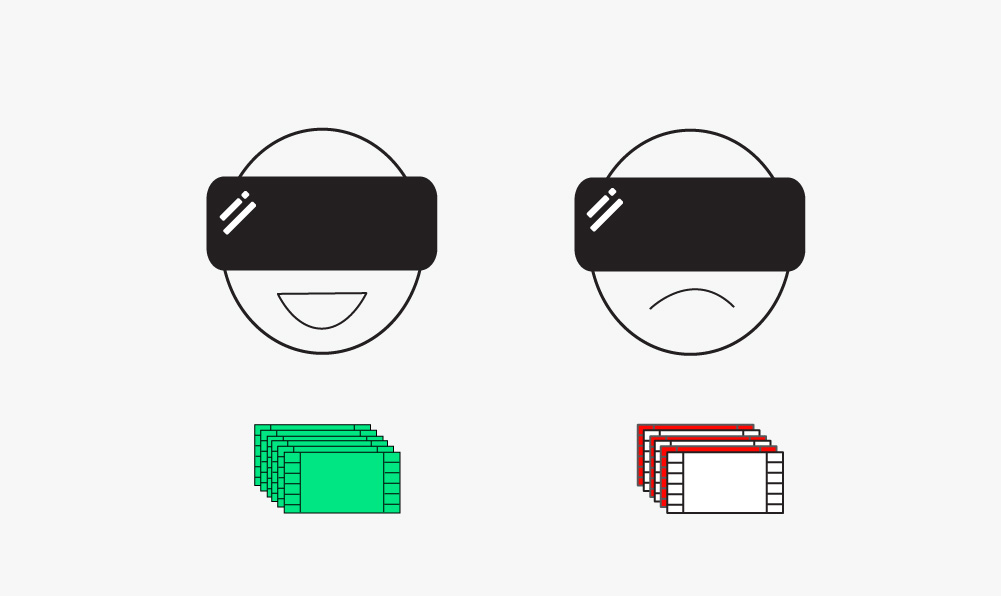

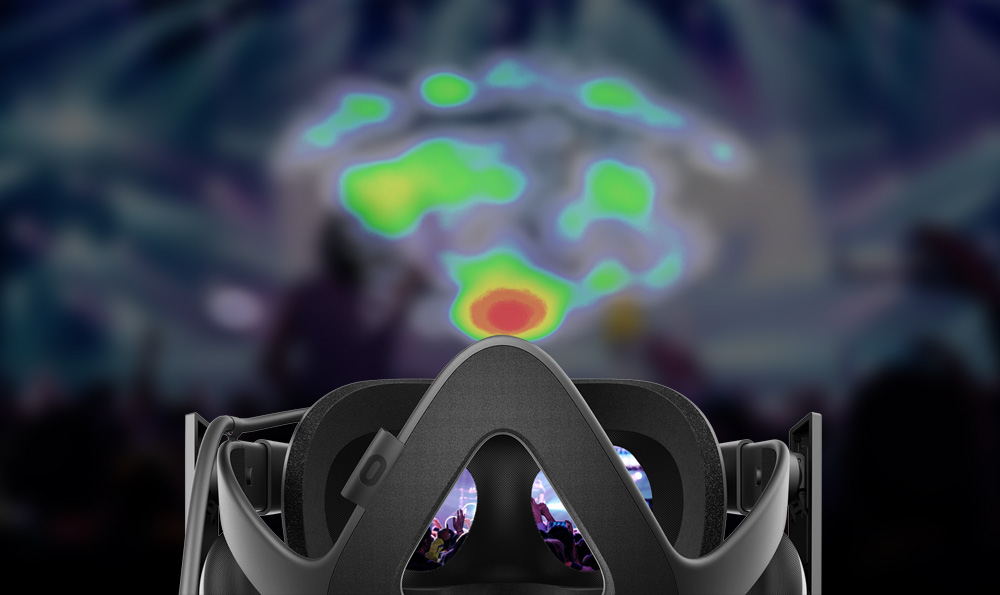

Heatmap

A heatmap is an analytical tool that shows what users are looking at within a VR experience, graphical interface, etc. It uses color-coding, usually ranging from red (“hot”) to blue or green (“cold”), to create a graphical representation of where the user’s attention is focused. On websites, heatmaps identify areas of interest by recording cursor movement or clicks, while heatmaps in VR usually map the patterns of the user’s gaze.
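
A VR gaze heatmap can be built by counting gaze directions in bins over the sphere and then normalising the counts so they can be mapped onto a cold-to-hot colour ramp. The sketch below assumes each gaze sample is a (longitude, latitude) direction in degrees and bins them on a coarse equirectangular grid; the grid size and function names are arbitrary, hypothetical choices.

```python
def gaze_heatmap(samples, cols=8, rows=4):
    """Count gaze samples in a coarse grid over the full sphere.

    `samples` are (longitude, latitude) pairs in degrees, longitude -180..180
    and latitude -90..90, laid out like an equirectangular frame.
    """
    grid = [[0] * cols for _ in range(rows)]
    for lon, lat in samples:
        c = min(int((lon + 180.0) / 360.0 * cols), cols - 1)  # clamp lon == 180
        r = min(int((90.0 - lat) / 180.0 * rows), rows - 1)    # clamp lat == -90
        grid[r][c] += 1
    return grid

def normalise(grid):
    """Scale counts to 0..1 so they can be mapped to a cold-to-hot colour ramp."""
    peak = max(max(row) for row in grid) or 1  # avoid dividing by zero
    return [[v / peak for v in row] for row in grid]

samples = [(0.0, 0.0), (10.0, -5.0), (20.0, -10.0), (-170.0, 80.0)]
for row in normalise(gaze_heatmap(samples)):
    print(row)
```

The "hottest" cell gets the value 1.0; a real tool would typically use finer bins and smooth the result before colouring it.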

HUD (head-up display)

The head-up display (HUD) is a way of showing data to the user without forcing them to look away from their current view, improving their ability to find relevant information and reducing the time it takes to do so. It enables the viewer to find out everything they need to know with a quick glance. In entertainment media, e.g., movies, videos, and video games, it can heighten immersion, as it shows information in a much subtler way than a pause menu would. Information is usually presented semi-transparently at the edges of the viewer’s field of view so as not to obstruct the actual video or game in progress, but it is kept easily readable through clever placement and bright colors.

I

Immersion

Immersion is the viewer’s sense of being part of a virtual environment. It is achieved when sound, design, atmosphere, visualization, etc. are able to create a sense of actually being in the virtual world. A developer’s goal is to perfect this set of stimuli to craft the most realistic, gripping user experience.

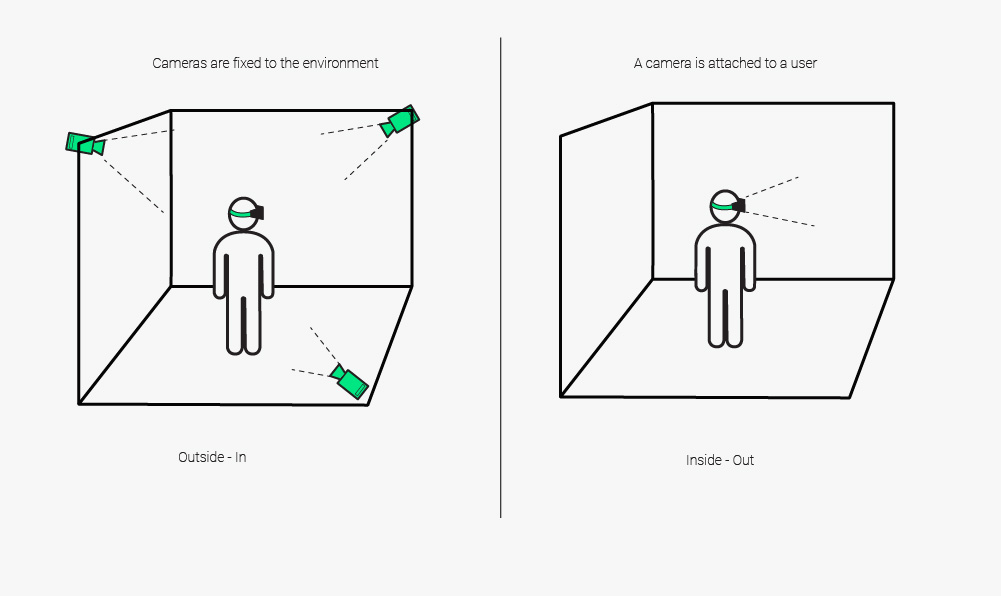

Inside-out tracking

Inside-out and outside-in tracking are the two different approaches to monitoring the user’s real-world movements in order to mirror them inside the VR environment. Outside-in tracking places cameras, sensors, and other tracking devices around the user to cover the area that corresponds to the virtual space the user will interact with. These devices trace the locations of any moving objects in the scene and translate them into virtual movements. While this approach offers both high accuracy and low latency, its range is strictly limited because the sensors don’t move, and it cannot record anything outside the sensors’ line of sight. Inside-out tracking, by contrast, relies exclusively on sensors built into the VR headset. The headset continuously updates its estimate of its own position to keep track of where the user is in the VR environment, and the camera(s) on the headset try to detect pre-placed markers in the user’s real surroundings, which serve as reference points for the user’s movements. However, inside-out tracking can also function without markers: markerless inside-out tracking tracks the position of objects and natural features in the camera’s surroundings and aligns the user relative to them. While this method is both cheaper and far more flexible than the two approaches above, it is less accurate, can have a much higher latency than other tracking methods, and requires all processing to be done solely by the headset.

J

K

L

Latency

Latency in virtual reality is the delay between user input (e.g., head, hand, or leg movements) and the corresponding output (e.g., visual, haptic, positional, or audio feedback). It is caused by a mixture of technical factors that tend to shrink as the technology advances. In its most common sense, the delay between a user’s movement and the matching update of the image in a game, for instance (often called motion-to-photon latency), latency is measured in milliseconds. High latency can cause VR sickness because of the extremely unnatural feeling of sight lagging behind movement.
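
A quick way to see why latency matters is to ask how far the displayed image trails the real head direction while the head is turning: the lag in degrees is the turning speed multiplied by the latency. The pipeline stages and numbers below are illustrative assumptions, not measurements of any particular headset, and the function names are hypothetical.

```python
def total_latency_ms(*stages_ms: float) -> float:
    """Motion-to-photon latency as the sum of (hypothetical) pipeline stages."""
    return sum(stages_ms)

def angular_lag_deg(head_speed_deg_per_s: float, latency_ms: float) -> float:
    """How far (in degrees) the displayed image trails the real head direction
    when the head turns at a constant speed and the pipeline has the given latency."""
    return head_speed_deg_per_s * latency_ms / 1000.0

# Illustrative budget: sensor read, rendering one 90 fps frame, display scan-out.
latency = total_latency_ms(2.0, 11.1, 5.0)
print(f"{latency:.1f} ms -> {angular_lag_deg(100.0, latency):.2f}° behind at 100°/s")
```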

Locomotion

Locomotion refers to the means by which the user is able to move around within a VR environment. Most systems use some combination of three different types of locomotion: teleportation, transportation, and perambulation. Teleportation allows the user to point and click on a location to teleport there or select from a predefined list of locations to travel to, giving them a certain freedom of movement but no option for movement in between locations. Transportation makes the user a passenger in a vehicle or on an animal that moves along a predefined path, allowing them to move their head or hands, but making them unable to move away from their mode of transportation in any way beyond choosing a different object to follow. Perambulation uses handheld controllers, the HMD, or room-tracking to track the user’s movements and give them the ability to move as they would in the real world, though only to the extent allowed by a given system.

M

Mixed Reality

Mixed reality technology overlays artificial content onto the real world and lets that content interact with the real-world scenery. The overlaid content is also continually updated, so the user can interact with it in real time. Essentially, mixed reality anchors interactive virtual objects to real-world positions, which allows the user’s surroundings to be modified in many ways. Like augmented reality, mixed reality has many practical uses, as it can adapt the user’s perception of reality to their needs, e.g., by making blueprints interactive before physical modeling or making unreachable parts of machinery visible and interactive. Mixed reality can be used to great effect in entertainment as well, since it lets the user overlay board games, sports games, etc. onto any surface at any location.

Monoscopic Video

Monoscopic 180° and 360° videos are the most commonly produced VR videos. They are usually captured with one camera per viewing direction, and the recordings are stitched together into a single equirectangular video. In contrast to a flat, rectangular 2D video, the image appears as a spherical surface around the viewer. Using a motion sensor in the device, the view moves with the user’s head, creating the illusion of being inside the sphere. Compared to stereoscopic video, however, the image looks completely flat: the same picture is shown to both eyes, so it contains no depth information. On the other hand, this makes monoscopic videos easier and cheaper to produce than stereoscopic ones, since they need less equipment and only deal with one image at a time. Monoscopic videos can also be displayed on any device, including desktop computers (via drag control), mobile devices (via motion sensor), and HMDs, while stereoscopic videos can only be watched on HMDs.

N

O

Outside-in tracking

Inside-out and outside-in tracking are the two different approaches to monitoring the user’s real-world movements in order to mirror them inside the VR environment. Outside-in tracking places cameras, sensors, and other tracking devices around the user to cover the area that corresponds to the virtual space the user will interact with. These devices trace the locations of any moving objects in the scene and translate them into virtual movements. While this approach offers both high accuracy and low latency, its range is strictly limited because the sensors don’t move, and it cannot record anything outside the sensors’ line of sight. Inside-out tracking, by contrast, relies exclusively on sensors built into the VR headset. The headset continuously updates its estimate of its own position to keep track of where the user is in the VR environment, and the camera(s) on the headset try to detect pre-placed markers in the user’s real surroundings, which serve as reference points for the user’s movements. However, inside-out tracking can also function without markers: markerless inside-out tracking tracks the position of objects and natural features in the camera’s surroundings and aligns the user relative to them. While this method is both cheaper and far more flexible than the two approaches above, it is less accurate, can have a much higher latency than other tracking methods, and requires all processing to be done solely by the headset.

P

Parallax

Parallax describes the apparent movement of objects when the viewer moves: objects further away from the viewer seem to move more slowly relative to the viewer, while objects closer to the viewer seem to move more quickly. Together with the overlapping but slightly different fields of vision of the two eyes (see: stereoscopic video), this effect allows people to gauge how far away an object is. In the same way, parallax is used to simulate depth on a two-dimensional surface in 3D videos and VR.
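
The size of the parallax shift follows from simple geometry: if the viewer steps sideways, the direction to an object straight ahead changes by the arctangent of the step divided by the object's distance. The sketch below (hypothetical function name, 10 cm step chosen for illustration) shows that near objects shift far more than distant ones.

```python
import math

def angular_shift_deg(sideways_move_m: float, distance_m: float) -> float:
    """Change in direction to an object straight ahead after the viewer steps sideways."""
    return math.degrees(math.atan2(sideways_move_m, distance_m))

for d in (0.5, 2.0, 10.0):
    print(f"object at {d} m shifts {angular_shift_deg(0.1, d):.2f}° for a 10 cm step")
```

Replacing the sideways step with the distance between the two eyes (roughly 6 cm, an assumed typical value) gives the small difference between the two eyes' views that stereoscopic video relies on.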

Positional Audio

Positional audio is an audio technique that ties sounds to specific sources within an environment, realistically simulating what the listener would hear from their point of view. Sounds always come from the expected position relative to the listener; if the listener or a sound source moves, the sound changes in volume and direction to reflect the move. Spatial audio is a related technique meant to immerse the listener in their content: it changes the sounds the listener hears based on the position of their head. If the listener turns their head, the audio perspective shifts and sounds may be heard more or less clearly through each ear; if the user turns their left ear towards a sound, the sound becomes louder on the left-hand side of their head and quieter on the right-hand side. Applying both positional and spatial audio effectively enables the listener to pinpoint the location of sound sources in an environment and to experience realistic shifts in the volume and direction of sounds as they move.
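
The sketch below combines the two ideas in a deliberately simple model: distance attenuation (positional) and a head-relative direction that shifts the balance between the ears (spatial). It uses constant-power stereo panning and inverse-distance attenuation as stand-ins; real engines typically use HRTF-based rendering. The angle convention (0° = straight ahead, positive = to the right) and the function names are assumptions.

```python
import math

def relative_azimuth(source_azimuth_deg: float, head_yaw_deg: float) -> float:
    """Direction of a source relative to where the head points, wrapped to -180..180.
    Assumed convention: 0 = straight ahead, positive = to the right."""
    return (source_azimuth_deg - head_yaw_deg + 180.0) % 360.0 - 180.0

def stereo_gains(rel_azimuth_deg: float, distance_m: float, ref_distance_m: float = 1.0):
    """Constant-power panning plus inverse-distance attenuation.

    Returns (left_gain, right_gain). A source behind the listener is panned
    like its mirror image in front, a known limitation of plain stereo panning.
    """
    pan = math.sin(math.radians(rel_azimuth_deg))   # -1 = hard left, +1 = hard right
    angle = (pan + 1.0) * math.pi / 4.0             # 0 .. pi/2
    attenuation = ref_distance_m / max(distance_m, ref_distance_m)
    return math.cos(angle) * attenuation, math.sin(angle) * attenuation

# A source straight ahead in the world, 2 m away; the listener turns 90° to the right.
rel = relative_azimuth(0.0, 90.0)
print(rel, stereo_gains(rel, 2.0))
```

After the head turn, the source sits to the listener's left, so all of its (distance-attenuated) level goes to the left ear.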

POV (point of view)

The point of view, or POV, is the reference point from which observations, calculations, and measurements are made: the location or position of the viewer or object in question. This is especially important when preparing virtual reality software, as the different points of view of each of the user’s eyes must be taken into account to provide a view as close as possible to their usual perspective. Since each eye has a different point of view, each one needs to be shown a different image at any given time, with a view slightly to the left or right of the other eye’s. Taken together, these images give the user a similar perspective and sense of depth as if they were physically present at the scene, even though they are looking at 2D screens.
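
To render one image per eye, a VR renderer needs a separate point of view for each eye, offset from the head position by half the interpupillary distance (IPD) along the head's sideways direction. The sketch below assumes a head that only yaws, a y-up coordinate system where yaw 0 looks along -z and positive yaw turns right, and a default IPD of 63 mm as a typical adult value; all of these are illustrative assumptions.

```python
import math

def eye_positions(head_pos, head_yaw_deg: float, ipd_m: float = 0.063):
    """Left and right eye positions for a head at `head_pos` (x, y, z).

    Assumed convention: y is up, yaw 0 looks along -z, positive yaw turns
    right, so the head's right-hand direction is (cos(yaw), 0, sin(yaw)).
    """
    yaw = math.radians(head_yaw_deg)
    rx, rz = math.cos(yaw), math.sin(yaw)  # unit vector pointing to the head's right
    half = ipd_m / 2
    x, y, z = head_pos
    left = (x - rx * half, y, z - rz * half)
    right = (x + rx * half, y, z + rz * half)
    return left, right

left, right = eye_positions((0.0, 1.7, 0.0), 0.0)
print(left, right)  # the scene is then rendered once from each position
```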

Q

R

Resolution (1080p - 16K)

Image resolution refers to the degree of detail an image holds, expressed as the number of pixels it contains. Higher resolutions make images sharper, since more pixels are used to represent the image, which adds detail. Screen size also drastically affects the sharpness of an image: on a small enough screen, even low-resolution images can look nearly identical to far higher-resolution ones, because the pixels are packed more densely and the image is less “pulled apart”. Conversely, even the highest resolution can look blurry and out of focus when stretched over a large enough screen.

The term ‘Full HD’ (1080p) refers to a video format that is 1080 pixels tall and usually 1920 pixels wide, giving the common 16:9 aspect ratio and a rectangular image. It is the next big ‘step up’ in resolution from the lower-resolution 720p HD format (1280×720). Videos and images in 2K resolution are 2048×1080 pixels; since only the width differs from Full HD, 2K is simply a slightly wider format. The 4K standard, however, contains many more pixels than either Full HD or 2K, roughly four times as many. In addition, 4K comes in two variants: DCI 4K for cinema, at 4096×2160 pixels, and Ultra HD (UHD) for televisions and computer monitors, at 3840×2160 pixels.

Note that on a VR device, the same image resolution results in a lower degree of detail and sharpness than on a flat screen. There are two reasons for this. First, a 180° or 360° image is spread across a spherical surface that is much larger than a flat, rectangular screen, while the headset only shows the part within its field of view, so the same number of pixels is stretched over a wider area. The same applies to 2D versions of 180°/360° videos. Second, in stereoscopic videos two separate images are packed into a single video file to be displayed side-by-side, one for each eye. As a result, each eye sees only half of the video’s actual pixels.
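
The two effects can be estimated with a back-of-the-envelope calculation: how many horizontal pixels of a video actually fall inside the headset's view for one eye. The sketch assumes a headset with a 100° horizontal field of view (an illustrative value) and ignores lens distortion and the extra stretching of equirectangular video near the poles; the function names are hypothetical.

```python
def pixels_per_degree(frame_width_px: float, horizontal_coverage_deg: float) -> float:
    """Horizontal pixels available per degree of the scene."""
    return frame_width_px / horizontal_coverage_deg

def visible_width_px(frame_width_px, coverage_deg, headset_fov_deg, stereo_sbs=False):
    """Horizontal pixels that fall inside the headset's view for one eye.

    For side-by-side stereoscopic video, each eye only gets half the frame width.
    """
    per_eye_width = frame_width_px / 2 if stereo_sbs else frame_width_px
    return pixels_per_degree(per_eye_width, coverage_deg) * headset_fov_deg

uhd_width = 3840
print(3840 * 2160 / (1920 * 1080))                              # 4.0: UHD vs Full HD pixel count
print(visible_width_px(uhd_width, 360, 100))                    # monoscopic 360°
print(visible_width_px(uhd_width, 180, 100))                    # monoscopic 180°
print(visible_width_px(uhd_width, 180, 100, stereo_sbs=True))   # stereoscopic 180° (SBS)
```

Under these assumptions, a 3840-pixel-wide 360° video leaves only about 1067 pixels across the visible view, fewer than a Full HD screen, and a stereoscopic 180° video ends up at the same per-eye figure.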

Reticle

The reticle refers to a visual marker representing the user’s gaze in a 3D environment. It helps the user keep track of their object of focus, but can break immersion when used in an unsubtle or unnecessary manner.

Room-scale / Room tracking

Room-scale VR is a subcategory of VR that extends the VR environment to a room-sized area. The user’s physical movements are tracked and reproduced inside the virtual environment, allowing them to interact with objects across a much wider area than stationary seated or standing VR would. The user can walk around and touch virtual objects anywhere in the monitored room, giving them a “box” of freedom of movement in the artificial environment. To accurately record the user’s movements, special sensors and trackers are placed strategically to cover the entire room. The VR gear carried by the user is used to calculate their movements relative to the rest of the room. This information is then used to place the user at the corresponding point in the VR environment and to register and respond appropriately to the user’s attempted interactions with objects inside the virtual box.

S

Side-by-side (SBS)

Side-by-side, or SBS, is a format in which the two views of a stereoscopic video, recorded by two cameras from slightly different positions, are placed next to each other in a single video frame, one mapped to the left eye and the other to the right eye. On the viewer’s device, the two images are then directed individually to each eye to create the 3D effect.
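
On playback, an SBS frame is simply cut down the middle, and each half is shown to one eye. The sketch below does this for a frame stored as a list of pixel rows; it assumes the left half holds the left-eye view (the usual arrangement, though some files swap them) and that the frame width is even. The function name is hypothetical.

```python
def split_sbs(frame):
    """Split a side-by-side frame (a list of pixel rows) into left- and right-eye views.

    Assumes the left half is the left-eye view and the frame width is even.
    """
    half = len(frame[0]) // 2
    left = [row[:half] for row in frame]
    right = [row[half:] for row in frame]
    return left, right

# A tiny 2x4 'frame': L pixels on the left half, R pixels on the right half.
frame = [["L1", "L2", "R1", "R2"],
         ["L3", "L4", "R3", "R4"]]
left_eye, right_eye = split_sbs(frame)
print(left_eye)   # [['L1', 'L2'], ['L3', 'L4']]
print(right_eye)  # [['R1', 'R2'], ['R3', 'R4']]
```

Each eye's view is half as wide as the full frame, which is the per-eye resolution loss described under Resolution.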

Spherical Video

360° or 180° video that appears to be wrapped around the viewer in a sphere, adjusting the FOV according to head movements (via motion sensor in mobile devices or HMDs or drag control on desktop devices).

Stereoscopic Video

In stereoscopic videos, a separate image is displayed to each eye, each with a slightly different perspective. Using two different images simulates normal human binocular vision and parallax, creating the illusion of depth, which makes VR experiences feel much more immersive and natural than monoscopic videos. A common example of (non-omnidirectional) stereoscopic video is the 3D movie, which provides two perspectives on the content that are then filtered to the correct eye by 3D glasses. Recording 360° stereoscopic video requires very different techniques from those used for 180° video, and it can be captured in many ways. One option is to use two omnidirectional cameras, one per eye, resulting in two omnidirectional 2D videos from slightly different perspectives, which are then combined to create stereoscopic vision. Another technique uses a rig of cameras facing in every direction to record video from all around the location; the image for each eye is then extracted from a combination of all captured angles. In theory, the ideal rig would have a camera for every possible head orientation, but the number of physically captured views is limited to the number of cameras, so the missing angles have to be synthesized by complex stitching software. Producing 360° stereoscopic video is also much more challenging and costly than producing 180° video. In particular, the stitching and assembly process is far more complex and requires a special skill set, and even minor flaws can result in poor video quality and artifacts and cause the viewer a great deal of discomfort.

Stitching (Video)

Video stitching is a technique used to produce large, panoramic, or high-resolution images. It takes multiple overlapping images from separate points of view and ‘stitches’ them together into one composite image. The overlapping regions must match closely, and the images must be taken at around the same time, to keep the result cohesive and avoid parallax, distortion, or lighting mismatches. A program then matches corresponding pixels between the images and aligns them all into one coherent picture. This is an essential part of producing 180°, 360°, and other VR videos, which require many images from all angles and viewpoints to be stitched together to cover every direction visible to the viewer.
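
After the images are aligned, the seam between them still has to be hidden, for example when the two cameras recorded slightly different brightness. One simple technique for this is feathering, i.e. linearly cross-fading from one image to the other across the overlap. The sketch below assumes alignment is already done and works on a single row of grayscale values; real stitching software also warps the images and often uses more advanced blending. The function name is hypothetical.

```python
def feather_blend_row(left_row, right_row, overlap):
    """Join two rows of pixel intensities whose last/first `overlap` pixels show
    the same part of the scene, cross-fading linearly across the overlap."""
    if overlap == 0:
        return left_row + right_row
    blended = []
    for i in range(overlap):
        w = (i + 1) / (overlap + 1)  # weight of the right image rises across the overlap
        a = left_row[len(left_row) - overlap + i]
        b = right_row[i]
        blended.append((1 - w) * a + w * b)
    return left_row[:-overlap] + blended + right_row[overlap:]

# Two 'images' whose 3-pixel overlap differs in brightness by 40.
print(feather_blend_row([100, 100, 100, 100], [140, 140, 140, 140], 3))
```

Instead of a hard jump from 100 to 140 at the seam, the brightness now changes in small steps across the overlap.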

T

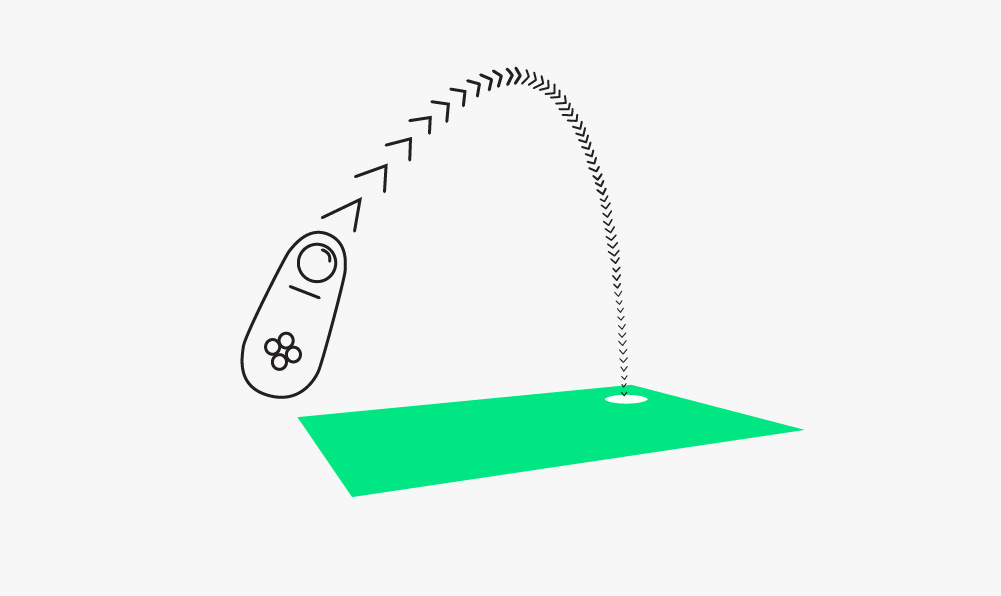

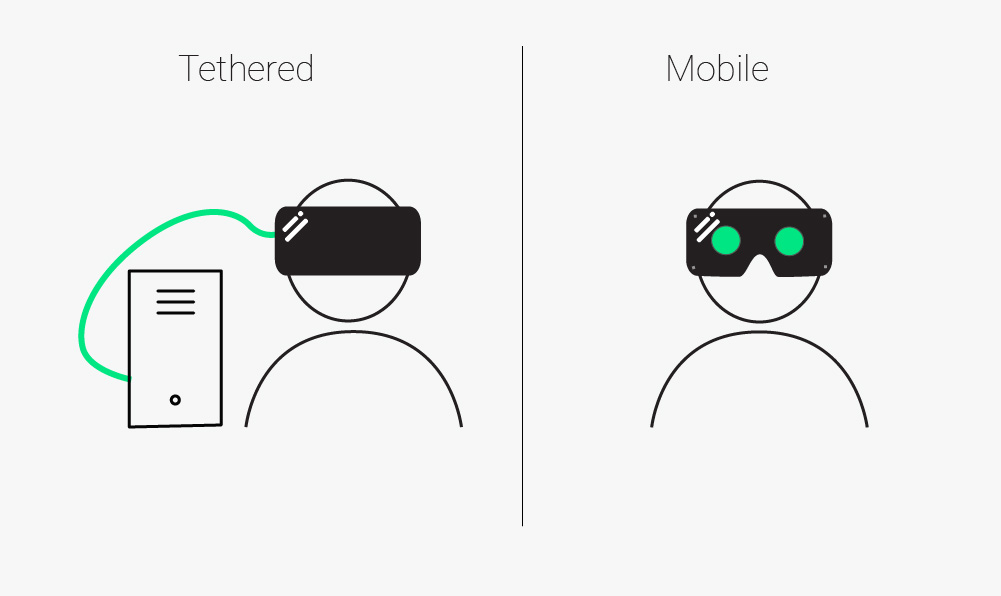

Tethered headset / Mobile Headset

There are currently two main types of VR headsets: tethered and mobile. Tethered headsets are connected by cable to a powerful computer, letting them use its processing power to track positions, movements, etc. Tethered headsets give the user freedom of movement beyond turning their head, as well as additional interactivity. However, they require the user to stay within a certain distance of the computer, since the headset must stay physically connected to it, and they are relatively expensive compared to their mobile counterparts. Mobile headsets can be taken anywhere, since they do not require a physical connection to a computer, and are far cheaper than tethered headsets. While they don’t restrict the user’s movements, many of them, particularly smartphone-based ones, lack the additional computing power needed to react to the user’s movements, which limits the user to looking around the environment without interacting with it or moving around in it.

U

V

Virtual Reality

Virtual reality, or VR, is a technology that, unlike augmented reality, creates an artificial world for the user to experience. It generates an interactive 3D space around the user that can be modified at will, aiming to transport the user to a different, wholly virtual place and let them experience movies, games, or otherwise unreachable places or events as if they were really there. To maintain an immersive virtual environment without leaving the user feeling disoriented, nauseous, or uncomfortable, many different criteria have to be met, including minimum frame rates, minimum resolutions, and a largely unrestricted field of view.

Virtual reality sickness

Virtual reality sickness is a feeling of discomfort or disorientation that can occur when experiencing virtual environments. There are several theories about its causes, mostly related to the small but noticeable gap between virtual reality and reality. One theory is that the difference between the user’s actual movements and what they see in VR creates an illusion of motion where there is none, causing a mismatch between what they see and what the brain expects. Another theory is that the technology does not simulate reality accurately enough: the latency is too high, the FOV has the wrong shape or size, etc. Depending on the individual user’s tolerance for these discrepancies, symptoms can range from disorientation, headache, and discomfort to stomach awareness, drowsiness, nausea, and vomiting. Because VR sickness can keep users from enjoying their experience, developers and engineers work continually to reduce inaccuracies and eliminate errors through better software and improved VR hardware.

W

WebGL

WebGL is a JavaScript API that enables hardware-accelerated 2D and 3D rendering in an HTML page without plugins, allowing web developers to, for example, render an entire 3D VR world directly in the browser.

WebVR

WebVR was an experimental JavaScript API supporting a variety of virtual reality devices, intended to let more people experience VR content through ordinary web browsers. Its great advantage was that, beyond the web browser, no further plug-ins or apps were required to view and design VR content. The API was developed largely by developers working for Mozilla and Google and has since been superseded by the WebXR Device API. The rendering of the VR content is based on the related WebGL API.